Easily load large amounts of client data into the Kurtosys App using supported file formats. The data is then consumed and loaded by Kurtosys-built loaders, and used in client documents, portals, or websites.

File Formats

Supported formats:

CSV (Comma Separated Values)

XML (eXtensible Markup Language)

JSON (JavaScript Object Notation)

XLSX (Excel Spreadsheet)

Non-supported formats:

DOC/DOCX (Word documents)

PDF

PPT/PPTX (Powerpoint presentations)

JPEG, PNG, etc (Images)

Do:

- It is important to use easily understandable and consistent column headers for your data

- You should ensure that each column contains only one type of data

Don't:

- Mix data types in one column

- Leave column headers blank ie unnamed columns within the file

Entity Identifiers

Entities refer to any share class, fund, benchmark, or account. To facilitate accurate data mapping and retrieval, every record for any entity in a data file should have a unique identifier. This enables seamless association of data during the loading process.

Common identifiers include ISINs, Tickers, Fund IDs, CUSIPs, and Account IDs.

Each entity and benchmark must have a unique identifier, and there should be a logical mapping that links them together.

Do:

- Ensure Uniqueness:

Each record must have a unique primary key - Use Stable Attributes:

Select attributes that won’t change over time - Keep it Simple:

Prefer a single code rather than a complex string

Don't:

- Use Changeable Data:

Avoid attributes that are likely to change - Overload Keys with Meaning:

Keep identifiers free from business logic - Use Large or Complex Keys:

They can lead to mapping issues

Examples of good identifiers:

- BIALX (Fund ID)

- US1234567890 (ISIN)

- BENCH123456 (Benchmark ID)

Examples of bad identifiers:

- Fixed Income Composite-Fund (Fund name)

- Bloomberg U.S. Treasury Bills (1-3M) Index

(Benchmark name)

Reporting / As Of Dates

Each set of data should include a consistent date across all records.

This maintains uniformity across all datasets for the same reporting period. Consistency in reporting dates enables easy reference and alignment across multiple datasets.

Do:

- Use Clear, Consistent Date Formatting:

Ensure all date fields follow a consistent format (e.g., YYYY-MM-DD) - Include Reporting Dates in Every Record:

Every data entry should have a corresponding date to indicate the reporting period.

Don't:

- Use Inconsistent Date Formats:

Avoid mixing date formats within the same column. It will result in errors in processing - Omit Dates or Use Incorrect Headers:

Each record must have a date, and the column header should clearly indicate that it represents the date.

Example of good reporting dates:

ID | Name | Reporting Date | Value |

|---|---|---|---|

1 | Name A | 2023-12-31 | 100 |

2 | Name B | 2023-12-31 | 200 |

3 | Name C | 2023-12-31 | 300 |

Example of bad reporting dates:

ID | Name | Value | |

|---|---|---|---|

1 | Name A | 2023-12-31 | 100 |

2 | Name B | 12-31-2023 | 200 |

3 | Name C | 31/12/2023 | 300 |

Consistent Data Structures

Ensure that data structures remain consistent over time. Do not add, remove, or rearrange columns from one month to the next without notifying Kurtosys about the change.

When data structures remain unchanged, it simplifies the development of automated processes, prevents disruptions in data processing, and reduces the need for frequent adjustments which can become costly over time.

Do:

- Preserve Data Types:

Ensure that the data type for each column remains the same across different reporting periods - Keep Column Order Unchanged:

Do not rearrange columns from one reporting cycle to the next to avoid confusion and errors in data handling - Use Formulas in Files:

If formulas are used to calculate values, it is crucial to replace them with the corresponding values

Don't:

- Add or Remove Columns:

Do not introduce new columns or remove existing ones between reporting cycles without notifying Kurtosys of the change, as it can disrupt data processing routines - Modify Data Types:

Do not change the data format or type within a column (e.g. from numeric to string or vice versa) - Leave Formulas in Files:

Files with formulas will load the formula as data instead of the resulting value

Example of a good data structure:

ID | Name | Reporting Date | Value |

|---|---|---|---|

1 | Name A | 2023-12-31 | 100 |

2 | Name B | 2023-12-31 | 200 |

3 | Name C | 2023-12-31 | 300 |

4 | Name D | 2023-12-31 | 250 |

Example of a bad data structure:

ID | Name | Report Date | Value | Comment |

|---|---|---|---|---|

1 | Name A | 2023-12-31 | 100 | New |

2 | Name B | 2023-12-31 | Two hundred | Load |

3 | Name C | 2023-12-31 | 300 | Delete |

4 | Name D | 2023-12-31 | =100+150 | New |

Consistency in File Names and Types

It’s important to maintain consistency in data structures over time. One way to ensure this is by establishing consistent naming conventions for file names. This will help in correctly identifying files for processing.

Do:

- Maintain File Naming Convention:

Consistently adhere to the established file naming convention to guarantee successful validation and loading of the file - Maintain File Type:

Consistently adhere to the established file type to guarantee successful validation and loading of the file

Don't:

Change File Naming Convention:

Ensure the file name remains unchanged between reporting cycles to prevent validation errorsChange File Type:

Ensure the file type remains unchanged between reporting cycles to prevent validation errors

Example of good file names and types over time:

Date received | File name | File type |

|---|---|---|

1 Apr ’23 | monthly_returns_20240331.csv | CSV |

1 May ’23 | monthly_returns_20240430.csv | CSV |

1 Jun ’23 | monthly_returns_20240531.csv | CSV |

Example of bad file names and types over time:

Date received | File name | File type |

|---|---|---|

1 Apr ’23 | monthly_returns_20240331.xlsx | XLSX |

1 May ’23 | monthly_20240430.csv | CSV |

1 Jun ’23 | monthly_returns_.xls | XLS |

Data Validation Checks

It is important to perform detailed checks on the information provided to ensure that it is accurate, complete, and trustworthy. This will help to spot and correct any mistakes, discrepancies or irregularities in the data. By validating the data against predetermined standards and guidelines, we can assure its dependability and appropriateness for examination and handling.

Validation checks can include:

- Check for duplicates

- Check column formats

- Check column count and order

- Check file types

- Check that each record has a unique identifier

- Check data types

- Check file names

Common Mistakes Made

It is recommended to avoid manual data processing, which increases the risk of errors and inconsistencies. Instead, automated processes should be used for successful data ingestion and loading.

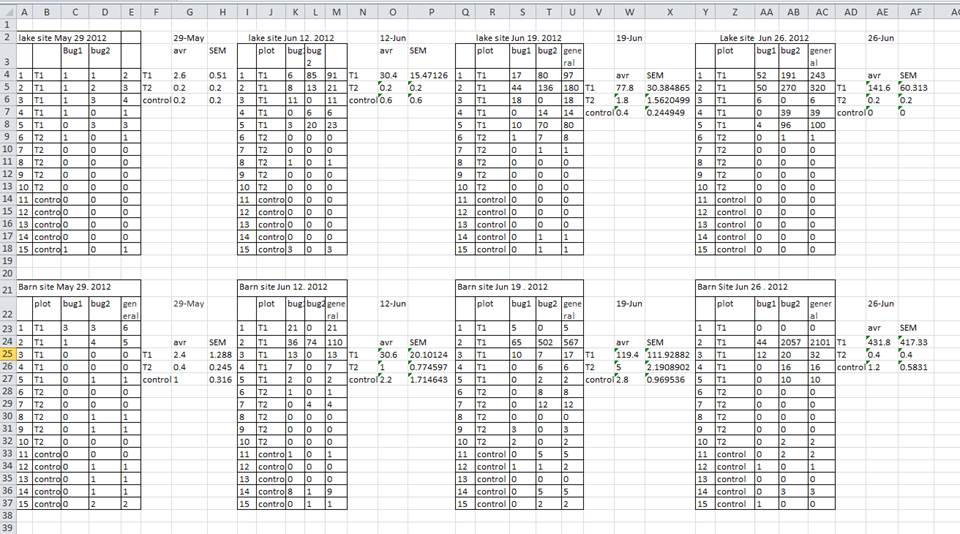

Multiple Tables per Page

A common mistake is creating multiple data tables within a single spreadsheet as show in the image.

A computer will rigidly interpret anything in the same row as belonging to the same observation.

Rather consolidate the tables into one. This will ensure a consistent data structure, and avoid errors when looking up data from different tables.

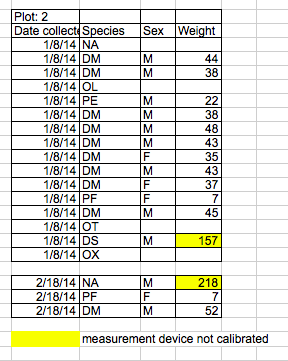

Using Problematic Null or Blank Values

When working with data in a file, it is crucial to distinguish between a zero and a blank cell.

A zero represents a valid measurement, whereas a blank cell indicates that no measurement was taken. In such cases, the computer interprets the blank cell as an unknown value, also known as a null value.

It is important to accurately record zeros as zeros and null values as nulls. Any other substitution for null values should be avoided. The table below shows common substitutions that should not be used.

Data Value | Problem | Recommendation |

|---|---|---|

‘0’ | Indistinguishable from a true zero if used to fill blanks in the data | Avoid unless a true zero |

‘NA’, ‘na’, ‘N/A’ or similar | Causes problems with data-type validation (turns a numerical column into a text column) | Avoid – leave blank |

‘NULL’ | Can cause problems with data type validation | Avoid – leave blank |

‘No data’ | Can cause problems with data type validation | Avoid – leave blank |

‘-‘,’+’,’.’ | Can cause problems with data type validation | Avoid – leave blank |

‘ ‘ (An empty space) | Can cause problems with data type validation | Avoid – leave blank |

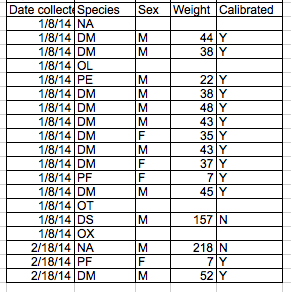

Using Formatting to Convey Information

A common example of using formatting to convey information is to highlight cells in a specific color that you want to be dealt with differently than others.

Another example is to leave a blank row to indicate a separation in the data. However, it is important to note that both of these highlighting approaches can cause problems since they are undetectable to computers.

Don't do this:

Do this:

Merging Cells or Unnecessary Aesthetic Formatting

When formatting a worksheet, avoid merging cells and using borders to separate data as it can impede the computer’s ability to see associations in the data. Merging cells cause column headers to disappear making it difficult to load data.

Borders alone will not be enough to indicate the start or end of a particular dataset. Rather place different tables on different tabs.

Placing Units in Cells

Units should be consistent and not included in data cells. Instead of noting “$5MM” in a cell, just write “5” and specify the unit in the column header or a separate column to avoid analysis complications.

Using Problematic Field or Column Names

For easy data analysis, use descriptive yet concise column names. Avoid spaces, numbers at the start, and special characters.

Use underscores or CamelCase for multi-word names to maintain readability and data integrity.

Avoid | Alternative | Reason |

|---|---|---|

MaxPercentage ( % ) | max_percentage | Uses a special character ( % ) |

Mean_growth/year | mean_yr_growth | Uses a special character ( / ) |

Annual returns | annual_returns | Uses a blank character |

3 Year Returns | returns_3yr | Uses a blank character and starts with a number |